How do you make a web map?

This is the question I have been exploring for the past while as I try to expand my basic knowledge of GIS beyond ArcGIS. As a result of this exploration, I have put a few maps on-line and developed a keen passion for map making and an interest in expanding my skills. This post is a list of my mapping tools - those I currently use, those I am actively learning, and those on my list to learn. The geo-stack that I am working toward comprises the following:

- PostGIS - works as the spatial database for storing and serving the data to either QGIS or TileMill

- QGIS + TileMill - QGIS is a great tool for analyzing and processing data; TileMill makes it look good and exports it as MBTiles.

- PHP Tile Server - This serves the MBTiles over the internet.

- Leaflet JS - Leaflet provides the user interface allowing someone on-line to interact with the MBTiles.

While I am learning the components of this stack, I use other things, described below.

Web mapping tools I use

Open Data - Open data forms the basis for most of my base map data. OpenStreetMap extracts allow me to build interesting, complete and free base maps, and various open data portals offer data for mashing. My go-to data portals are:

- Edmonton's Open Data Portal

- Geogratis

- Government of Alberta's Open Data Portal

- Government of Canada Open Data Portal

- Stats Can

- Natural Earth

- Open Street Map Metro Extracts

- Open Street Map Country and Province Extracts

- Canadian GIS: a list of open/free data sources from Canadian GIS

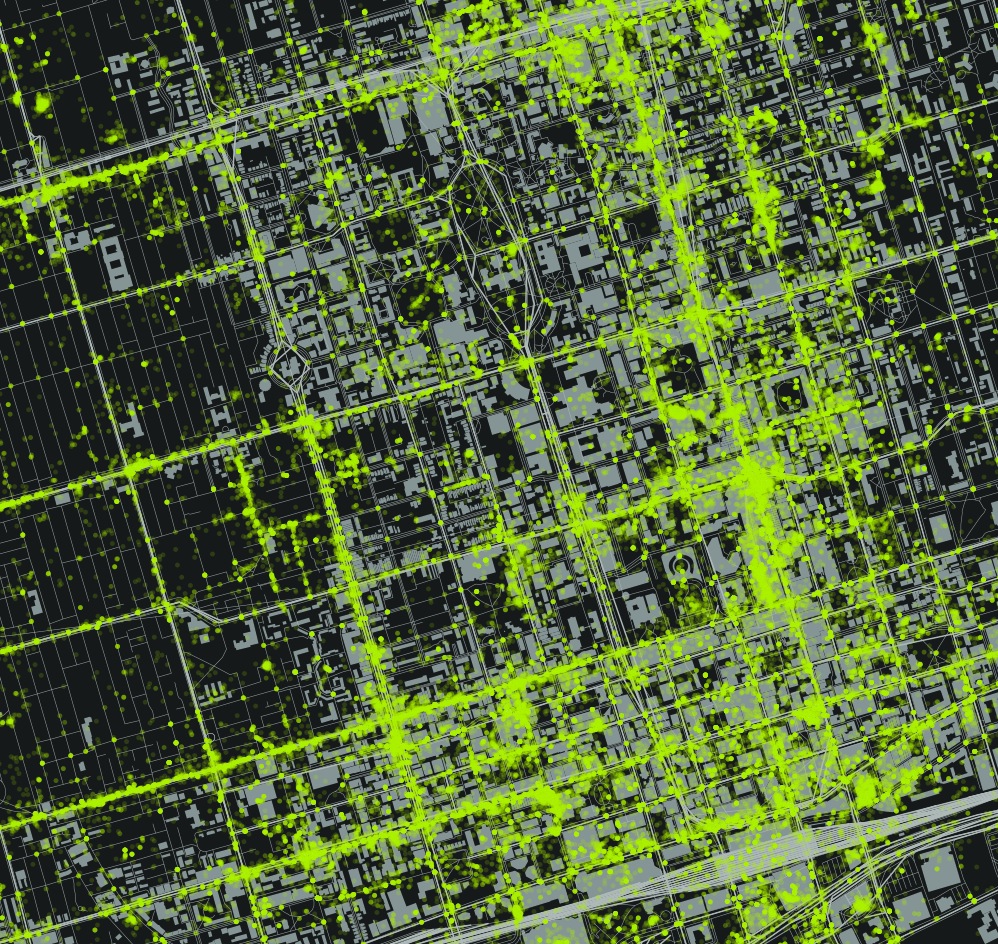

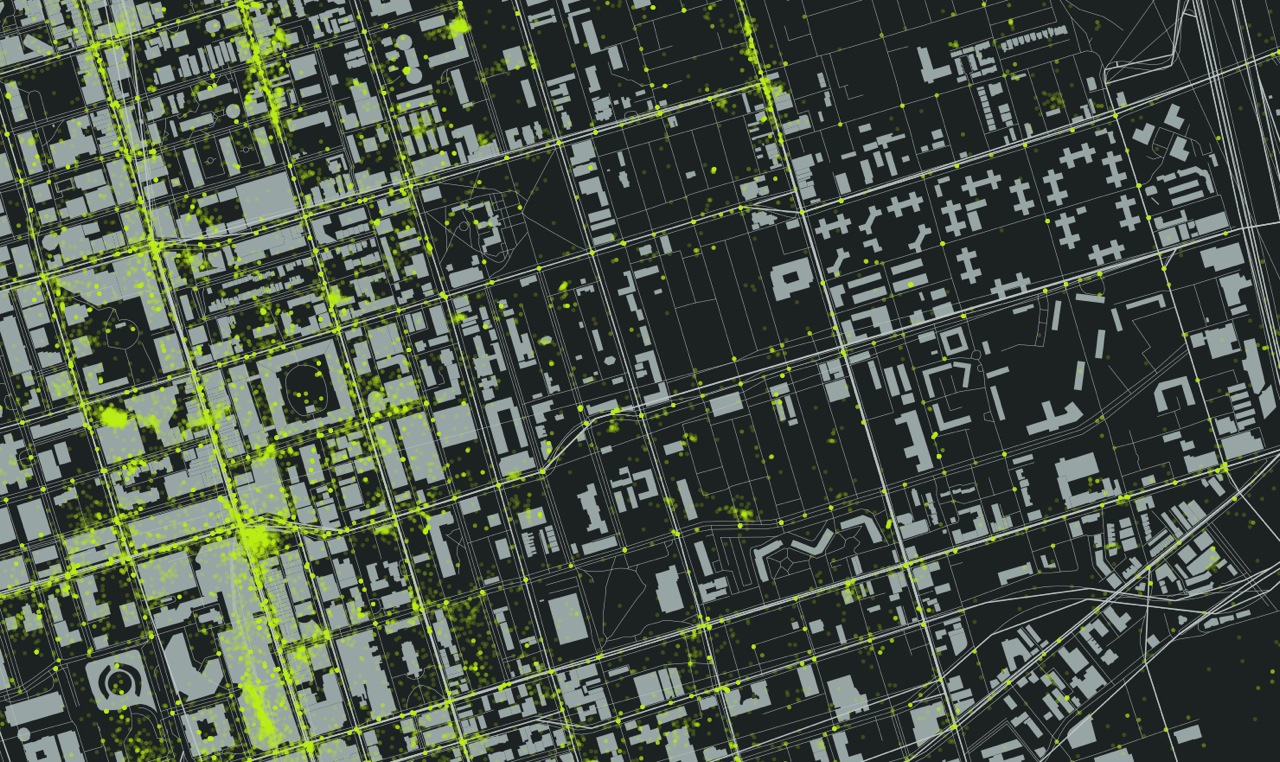

OpenStreetMap - I am a minor contributor to OSM, and mainly use it as a database for urban Edmonton data. For instance, an ongoing project is to classify each building by type (apartment, commercial, etc.) in downtown Edmonton so that I can update my DTYEG map and create an accurate land use map of #yegdt.

Cartographica - I mainly use Cartographica as a desktop geocoder and a quick and dirty data viz tool. I love how simple it is to dump data into the view window, and how quickly it renders large data sets. It is a light and easy way to get a sense of a dataset, plus it has a 'live' map feed of OpenStreetMap or Bing. It can import or export KML, and complete some lightweight data analysis like heat maps.

QGIS - Where Cartographica is light, QGIS is robust. It is a free way to get a full GIS on your desktop and, because I run an iMac, the easiest way to do spatial analysis without loading a Windows VM (and much cheaper too, as in free). I love QGIS, but it requires a set of skills comparable to those used in ArcGIS. I am still building this skill set.

TileMill - TileMill is awesome. A super easy to use map styling machine by MapBox, TileMill uses CartoCSS (Cartographic Cascading Style Sheets) to code the look of each point, line, polygon and raster within your map. It renders fast, beautiful maps and exports them in a variety of formats, including MBTiles, which you can then load onto the MapBox site for a fully interactive map experience.

MapBox - MapBox provides two services that I find vital - (1) web hosting and (2) base maps that can be styled. I am not yet skilled enough to take the MBTiles and put them on-line myself, so I rely on a MapBox subscription to host my maps. When I am working with a large geographic area, I am not yet skilled at dealing with huge data sets, so I also use MapBox's base map, built from OSM, which can be customized. MapBox also provides some great satellite imagery as a base map, and an awesome blog on what is new in mapping.

Web mapping tools I am learning

PostGIS - I learned recently that the cool kids pronounce this as Post-jis NOT Post G-I-S. PostGIS is hard and I don't really get it - it is an OSS project that adds support for geographic data to a PostgreSQL database. I have been working with a Refractions Research tutorial, and have been able to install PostgreSQL and enable PostGIS, but I am unfamiliar with SQL so I find it hard even knowing how to compose a command. Lots to learn here. My PostGIS resources include:

CartoDB - I love how CartoDB makes temporal data come alive. Check out this map of '7 Years of Tornado Data', and how you can almost pick out the season by the amount of tornado activity. Apparently you can style the map in CartoCSS (which I can do), combine various data sets, and run SQL queries. Much to learn.

Leaflet JS - "Leaflet is a modern open-source JavaScript library for mobile-friendly interactive maps". It provides the UI that lets the user interact with the MBTiles, like so: MBTiles > PHP Tile Server > Leaflet > User.
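To make the MBTiles > PHP Tile Server > Leaflet chain a little more concrete, here is a minimal Python sketch of the lookup step a tile server performs. It is not how PHP Tile Server itself is written. It relies on two things from the MBTiles specification: an MBTiles file is a SQLite database with a `tiles` table, and rows are stored in the TMS scheme (counted from the bottom), so the y value from a typical web map request has to be flipped. The function names and the in-memory demo database are my own assumptions for illustration, and the tile bytes are a placeholder rather than a real image.

```python
import sqlite3
from typing import Optional


def xyz_to_tms_row(zoom: int, y: int) -> int:
    """Flip a top-origin (XYZ) row into the bottom-origin (TMS) row MBTiles stores."""
    return (2 ** zoom) - 1 - y


def read_tile(conn: sqlite3.Connection, zoom: int, x: int, y: int) -> Optional[bytes]:
    """Return the raw tile bytes for an XYZ request, or None if the tile is missing."""
    row = conn.execute(
        "SELECT tile_data FROM tiles "
        "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
        (zoom, x, xyz_to_tms_row(zoom, y)),
    ).fetchone()
    return row[0] if row else None


def make_demo_mbtiles() -> sqlite3.Connection:
    """Build a tiny in-memory stand-in for an MBTiles file (hypothetical data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE tiles (zoom_level INTEGER, tile_column INTEGER, "
        "tile_row INTEGER, tile_data BLOB)"
    )
    # One tile at zoom 2, column 1, stored at TMS row 2 (which is XYZ row 1).
    conn.execute("INSERT INTO tiles VALUES (?, ?, ?, ?)", (2, 1, 2, b"fake-tile"))
    conn.commit()
    return conn


if __name__ == "__main__":
    demo = make_demo_mbtiles()
    print(read_tile(demo, 2, 1, 1))  # the stored placeholder bytes
    print(read_tile(demo, 2, 0, 0))  # no such tile, so None
```

With a real file, you would pass `sqlite3.connect("my_map.mbtiles")` (a hypothetical path) instead of the demo connection; the server's remaining job is to send those bytes back over HTTP for Leaflet to draw.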

Web mapping tools I want to learn

Below I list a bunch of tools that I want to learn, but I am still coming to grips with what they do and how they interact. For instance, I can picture the workflow of data from PostGIS to TileMill to MapBox, but I cannot picture the workflow from TileMill (MBTiles output) to PHP Tile Server, or the role that JavaScript and HTML play in the creation of a hosted map (ok, I kinda get it, but not in a concrete, I-have-done-this way). If I get anything wrong here (or anywhere in the post) please let me know - also, if I am missing a great resource, make a note of it in the comments.

PHP Tile Server - PHP Tile Server serves the MBTiles file onto the web and acts as an interface between the MBTiles and a UI such as Leaflet JS or even Google Maps.

HTML - HTML provides the backbone of every website. Learning to code HTML would simply allow me to create and style the webpage that my MBTiles are displayed upon.

JavaScript - Alongside HTML, JS is a programming language that provides function to a website. Where HTML is static, JS is dynamic, allowing the user to interact with elements of the website. For instance, in a mapping context, JS would allow me to define a set of layers that a user could turn off and on to expose or hide specific types of data on a map. Plan Your Place has a great interactive flood map of Calgary that illustrates this function.

GeoJSON - A format based on JSON (JavaScript Object Notation), as is TopoJSON, that encodes spatial data such as points, lines and polygons. In the web mapping context it is a much more convenient format than ESRI Shapefiles, as it is a single plain-text file that the browser can read directly and that can be integrated into the code of the map.
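As a rough illustration of what that looks like, here is a small Python sketch that builds a GeoJSON point and prints it as text. The `Feature` / `FeatureCollection` structure and the longitude-then-latitude coordinate order come from the GeoJSON specification; the helper names, the property values and the approximate downtown Edmonton coordinates are assumptions of mine for the example.

```python
import json
from typing import Any, Dict, List


def point_feature(lon: float, lat: float, properties: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap a single point as a GeoJSON Feature (coordinates are [longitude, latitude])."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


def feature_collection(features: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Group Features into the FeatureCollection that most web maps expect."""
    return {"type": "FeatureCollection", "features": features}


if __name__ == "__main__":
    # Approximate location, for illustration only.
    downtown = point_feature(-113.49, 53.54, {"name": "Downtown Edmonton", "type": "example"})
    print(json.dumps(feature_collection([downtown]), indent=2))
```

The printed text is what you would save as a `.geojson` file or paste into the map's JavaScript, where a library such as Leaflet can draw it as a layer.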

Resources

This is not a complete list - in fact it is barely a list. Please add a comment to point out what I am missing.

- Codecademy - A free on-line coding tutorial that is interactive and problem based. They offer tutorials for JavaScript, HTML and PHP, and help you learn how to build web projects. Very cool and free.

- GitHub Learn GeoJSON - GitHub is a place where programmers, especially those working in the OSS space, keep their code for others to download, use and improve upon. This tutorial was made by Lyzi Diamond.

- Maptime! - An awesome list of mapping resources by Alan McConchie (@almccon) and Matthew McKenna (@mpmckenna8).

- Spatial Analysis On-line - As I try to remember my GIS courses, this is the on-line text that I reference to help me understand the analysis I want to run.

- Mapschool - Tom MacWright wrote this as a crash course in mapping for developers.

Colour and Maps

These are the colour palette websites that I reference:

Finally, NASA has a great 6-part series on colour theory called the "Subtleties of Color".